I am a PhD candidate at the Causal Learning and Artificial Intelligence Lab at IST Austria, supervised by Francesco Locatello. My research focuses on compositional generalization and out-of-distribution detection. I study the structure of learned latent spaces, leveraging the geometry and statistical properties of encoded features to better understand and improve generalization. I have recently become interested in virtual cell modeling through generative approaches, as a way to study how well generative models generalize. I am co-affiliated with the Chan Zuckerberg Initiative's AI for Science program, which supports my PhD research.

Prior to beginning my PhD, I earned a master's degree from Sabanci University, where I was a member of the VPA Lab, supervised by Asst. Prof. Huseyin Ozkan and Asst. Prof. Erchan Aptoula. My master's studies focused on group activity recognition and domain generalization. In the summer of 2019, I completed an internship at Erasmus University Rotterdam.

Email / CV / GitHub / Google Scholar / Twitter |

|

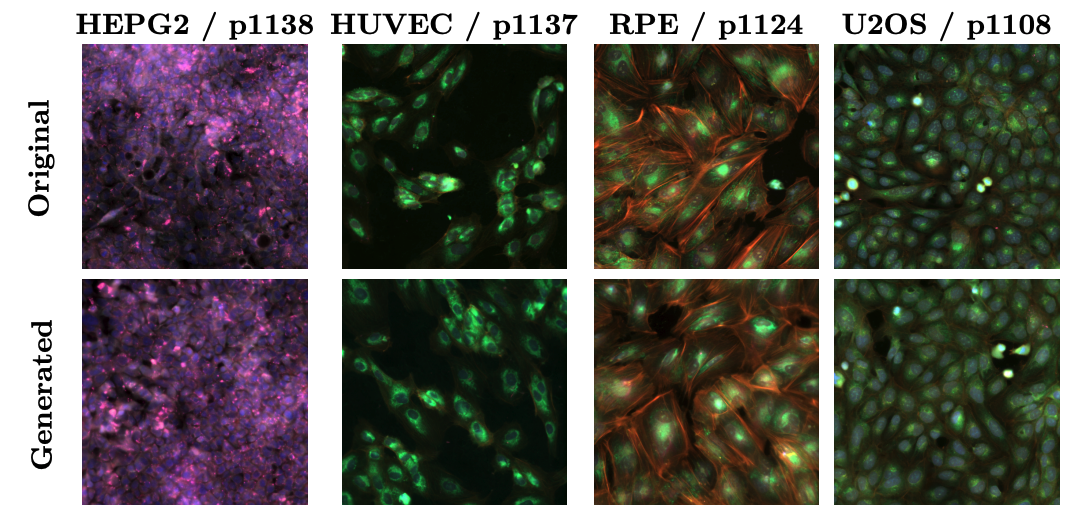

Berker Demirel, Marco Fumero, Theofanis Karaletsos, Francesco Locatello arXiv:2510.01298, 2025 pdf / code / bib We present MorphGen, a generative model designed for fluorescent microscopy, enabling controllable and biologically consistent image generation across various cell types and perturbations. By leveraging a diffusion-based approach and aligning with phenotypic embeddings from OpenPhenom, MorphGen preserves detailed organelle-specific structures across multiple fluorescent channels. This capability supports fine-grained morphological analysis, advancing applications in drug discovery and gene editing. |

|

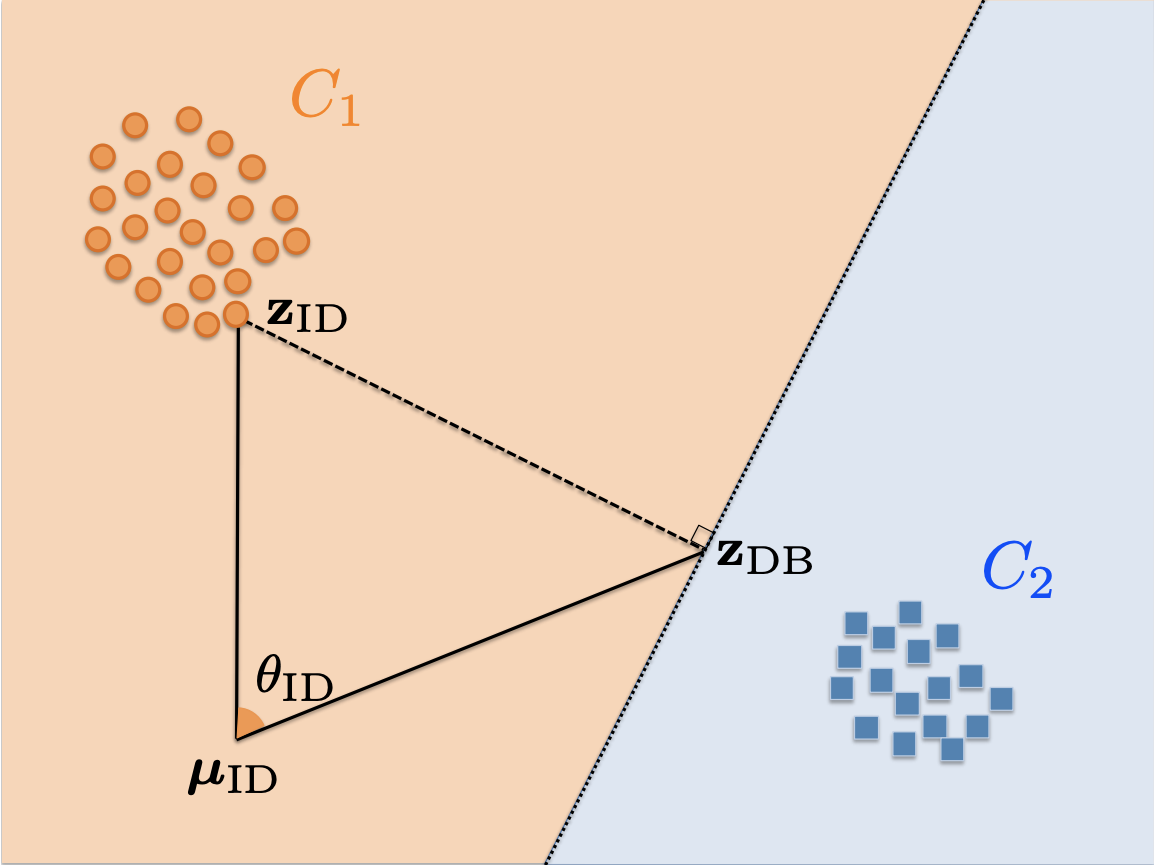

Berker Demirel, Marco Fumero, Francesco Locatello NeurIPS, 2025 pdf / code / bib We present Out-of-Distribution Detection with Relative Angles (ORA), a novel technique that computes the angles between a feature representation and its projections onto the decision boundaries, relative to the mean of the in-distribution (ID) features. ORA is model-agnostic, hyperparameter-free, and efficient, scaling linearly with the number of ID classes. It can therefore be combined flexibly with various architectures without additional tuning. In addition, ORA's scale invariance allows straightforward aggregation of confidence scores from multiple pre-trained models, improving ensemble performance for OOD detection. |
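
The geometric quantity described above can be illustrated with a minimal sketch. It assumes a linear classification head, so that the decision boundary between the predicted class and any other class is a hyperplane. The function names, the toy weights, and the choice to return the raw per-class angles are illustrative assumptions; how the paper turns these angles into a final OOD score is not reproduced here.

```python
import math

def project_onto_hyperplane(z, w, b):
    """Orthogonal projection of point z onto the hyperplane {x : w.x + b = 0}."""
    scale = (sum(wi * zi for wi, zi in zip(w, z)) + b) / sum(wi * wi for wi in w)
    return [zi - scale * wi for zi, wi in zip(z, w)]

def angle_from(mu, p, q):
    """Angle in radians at vertex mu between the rays mu->p and mu->q."""
    u = [pi - mi for pi, mi in zip(p, mu)]
    v = [qi - mi for qi, mi in zip(q, mu)]
    norm = math.hypot(*u) * math.hypot(*v)
    if norm == 0.0:  # degenerate case: a point coincides with mu
        return 0.0
    dot = sum(ui * vi for ui, vi in zip(u, v))
    return math.acos(max(-1.0, min(1.0, dot / norm)))

def relative_angles(z, weights, biases, mu):
    """For feature z, return the predicted class and, for every other class j,
    the angle seen from the ID mean mu between z and its projection onto the
    pairwise boundary (w_pred - w_j).x + (b_pred - b_j) = 0.
    Assumes a linear head (hypothetical setup for this sketch)."""
    logits = [sum(wi * zi for wi, zi in zip(w, z)) + b
              for w, b in zip(weights, biases)]
    pred = max(range(len(logits)), key=logits.__getitem__)
    angles = {}
    for j in range(len(weights)):
        if j == pred:
            continue
        w = [a - c for a, c in zip(weights[pred], weights[j])]
        b = biases[pred] - biases[j]
        angles[j] = angle_from(mu, z, project_onto_hyperplane(z, w, b))
    return pred, angles

# Toy example: two classes in 2D separated by the line x = 0.
pred, angles = relative_angles(
    z=[1.0, 0.0],
    weights=[[1.0, 0.0], [-1.0, 0.0]],
    biases=[0.0, 0.0],
    mu=[0.0, -1.0],
)
```

The loop performs one projection per non-predicted class, which matches the linear scaling in the number of ID classes mentioned above.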

|

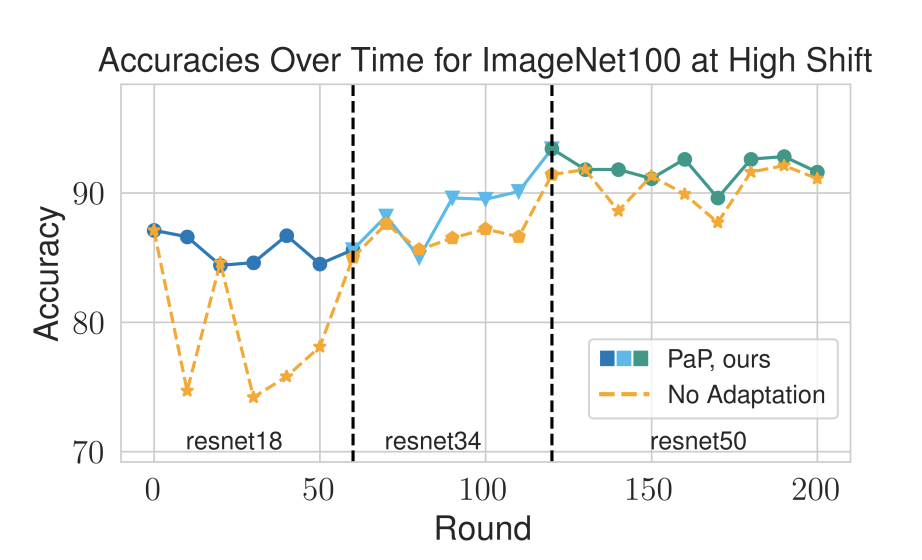

Berker Demirel, Lingjing Kong, Kun Zhang, Theofanis Karaletsos, Celestine Mendler-Dünner, Francesco Locatello arXiv:2410.04499, 2024 pdf / code / bib We propose a modular approach to tackle performative label shift for pretrained backbones. This additional module serves two main use cases: (i) adapting the model for performative shift and (ii) making informed model selection by anticipating future distributions caused by multiple models. For the first use case, it allows pre-shift adaptation for networks to better handle performative shifts. For the second, it can anticipate a model's robustness to performative shifts, enabling more informative model selection. Thanks to our modeling approach capturing the inherent relationship between the sufficient statistic and the performative shift, it is not coupled with the specific architecture it is trained with. Therefore, it can seamlessly combine with various pretrained networks, allowing zero-shot transfer during model updates. |

|

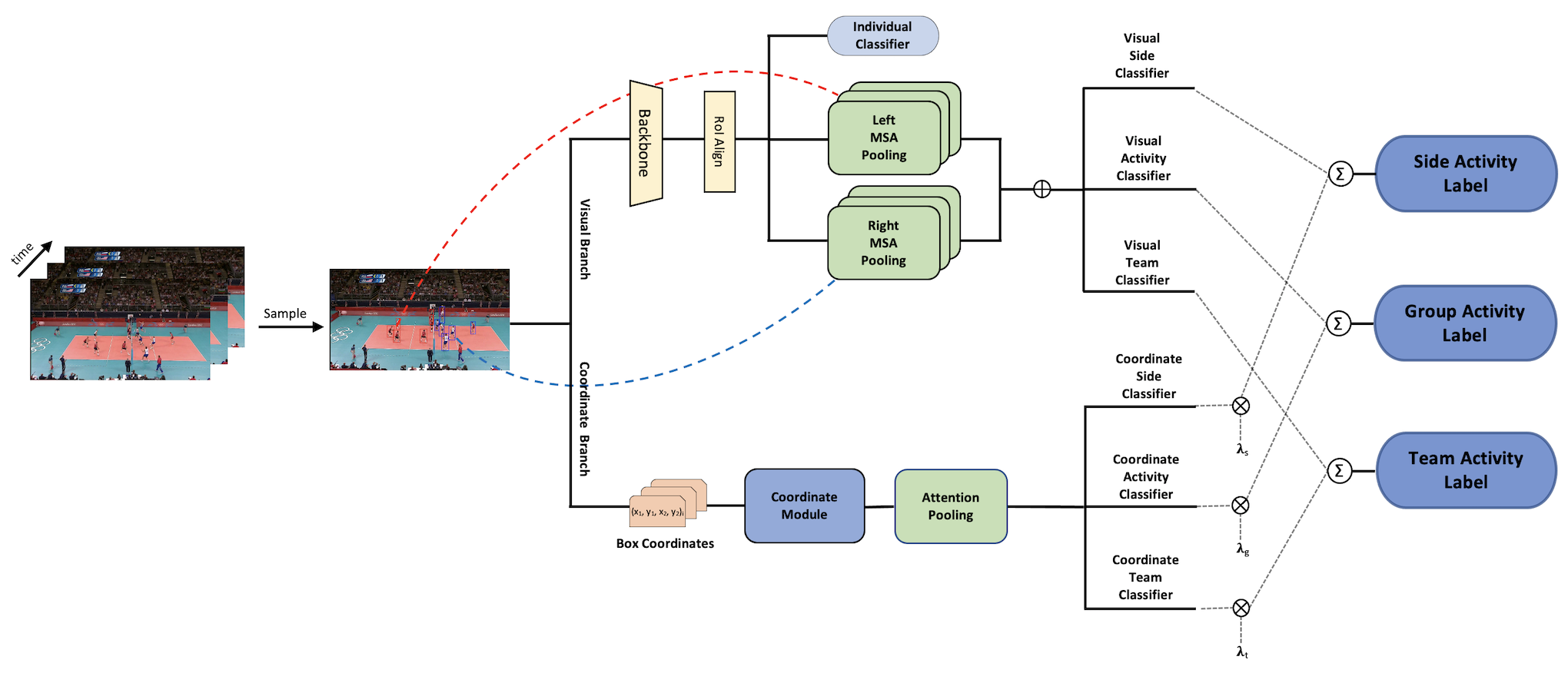

Berker Demirel, Huseyin Ozkan IEEE International Conference on Image Processing (ICIP), 2024 pdf / code / bib We show that group activity recognition can be formulated with an attention pooling mechanism and that this formulation performs on par with other state-of-the-art methods, even with a single RGB frame. Moreover, we manually reannotated the flawed instances in the Volleyball Dataset, a widely used dataset in group activity recognition. |

|

Berker Demirel, Erchan Aptoula, Huseyin Ozkan arXiv:2308.06624, 2023 pdf / code / bib We propose an additive disentanglement of domain-specific and domain-invariant features for the domain generalization problem. Unlike prior work, we demonstrate the potential benefits of using domain-specific features alongside domain-invariant ones. Moreover, we introduce a new data augmentation technique that mixes samples from different domains within the latent space to enhance the generalization capacity of the architecture. |
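
As a rough illustration of mixing samples from different domains in latent space, the sketch below uses a mixup-style convex combination of two latent vectors drawn from two different domains. The Beta-distributed mixing coefficient, the function names, and the toy latents are assumptions made for this example; the exact mixing scheme used in the paper may differ.

```python
import random

def mix_latents(z_a, z_b, lam):
    """Convex combination lam * z_a + (1 - lam) * z_b of two latent vectors."""
    return [lam * a + (1.0 - lam) * b for a, b in zip(z_a, z_b)]

def cross_domain_mix(latents_by_domain, rng, alpha=1.0):
    """Pick two distinct domains, one latent from each, and mix them.
    lam ~ Beta(alpha, alpha) is a mixup-style assumption, not the paper's spec."""
    dom_a, dom_b = rng.sample(sorted(latents_by_domain), 2)
    z_a = rng.choice(latents_by_domain[dom_a])
    z_b = rng.choice(latents_by_domain[dom_b])
    lam = rng.betavariate(alpha, alpha)
    return mix_latents(z_a, z_b, lam), (dom_a, dom_b, lam)

# Toy latents for three hypothetical domains.
latents = {
    "photo": [[1.0, 0.0], [0.9, 0.1]],
    "sketch": [[0.0, 1.0], [0.1, 0.9]],
    "cartoon": [[0.5, 0.5]],
}
mixed, (dom_a, dom_b, lam) = cross_domain_mix(latents, random.Random(0))
```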

|

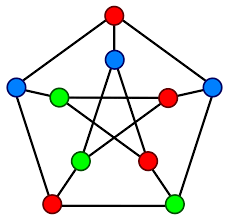

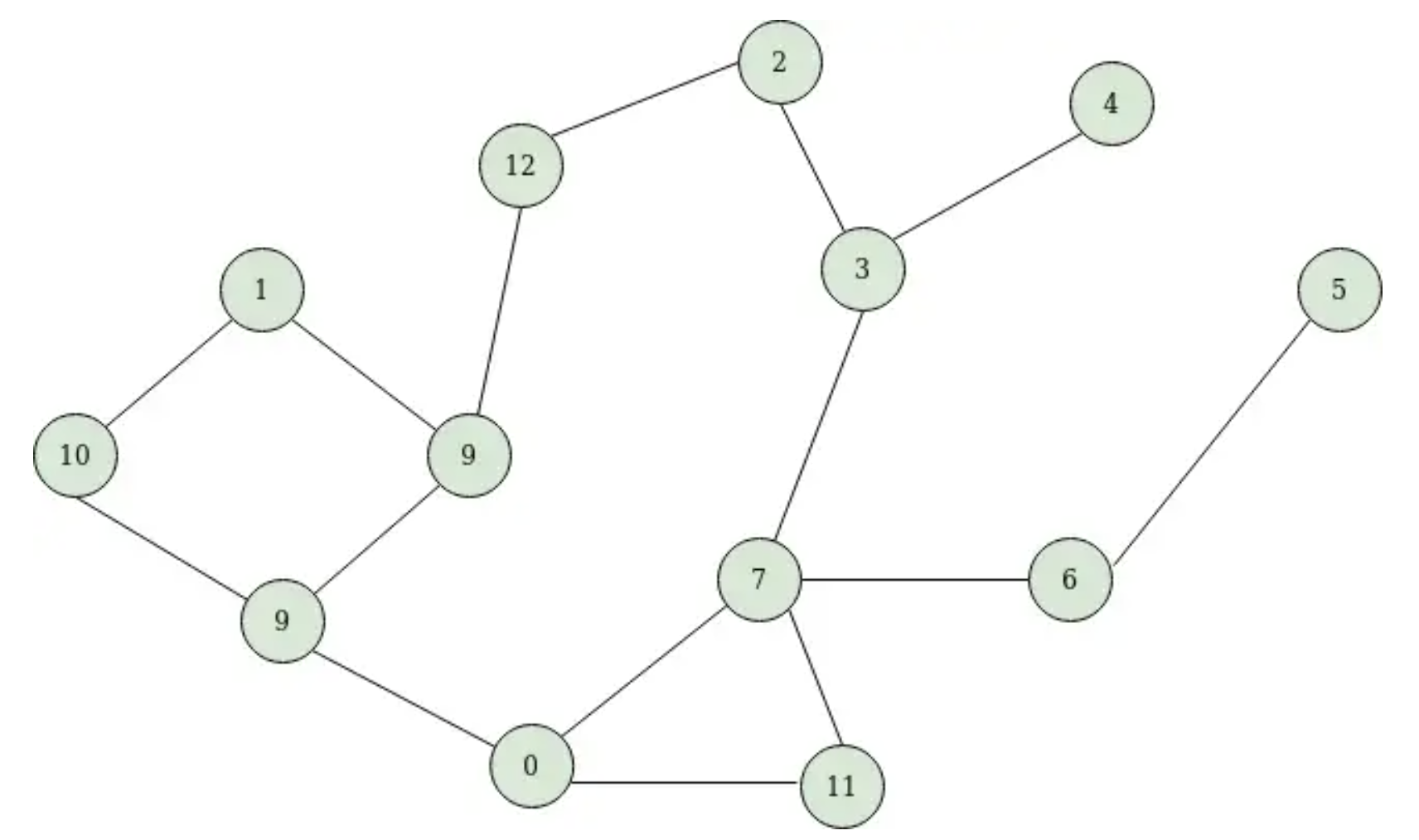

Berker Demirel, Arda Asik, Bugra Demir, Kamer Kaya, Baris Batuhan Topal IEEE Signal Processing and Communications Applications (SIU), 2020 pdf / code / bib We developed a ranking algorithm that combines degree-1/2/3 metrics, closeness centrality, clustering coefficient, and PageRank to rank the nodes of a graph. We then applied greedy coloring with the ranking as the vertex order, which resulted in significantly better colorings. Furthermore, we tried to extend this idea with a model-free, policy-based reinforcement learning algorithm while parallelizing the C++ backend using OpenMP. |
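
The idea of letting a node ranking drive greedy coloring can be sketched as follows. For brevity, this example ranks nodes by plain degree only, which is a stand-in assumption; the composite ranking described above (higher-order degrees, closeness centrality, clustering coefficient, PageRank) is not reproduced. The function names and the toy graph are illustrative.

```python
def degree_ranking(adj):
    """Order vertices by decreasing degree (a simple stand-in ranking)."""
    return sorted(adj, key=lambda v: len(adj[v]), reverse=True)

def greedy_coloring(adj, order):
    """Visit vertices in the given order and assign each the smallest
    color not already used by one of its colored neighbors."""
    color = {}
    for v in order:
        used = {color[u] for u in adj[v] if u in color}
        c = 0
        while c in used:
            c += 1
        color[v] = c
    return color

# Toy undirected graph: a triangle (0, 1, 2) with a pendant vertex 3.
adj = {0: [1, 2], 1: [0, 2], 2: [0, 1, 3], 3: [2]}
coloring = greedy_coloring(adj, degree_ranking(adj))
num_colors = max(coloring.values()) + 1
```

Any ranking can be swapped in by passing a different `order`; the quality of the coloring depends on that order, which is what makes the ranking step matter.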

|

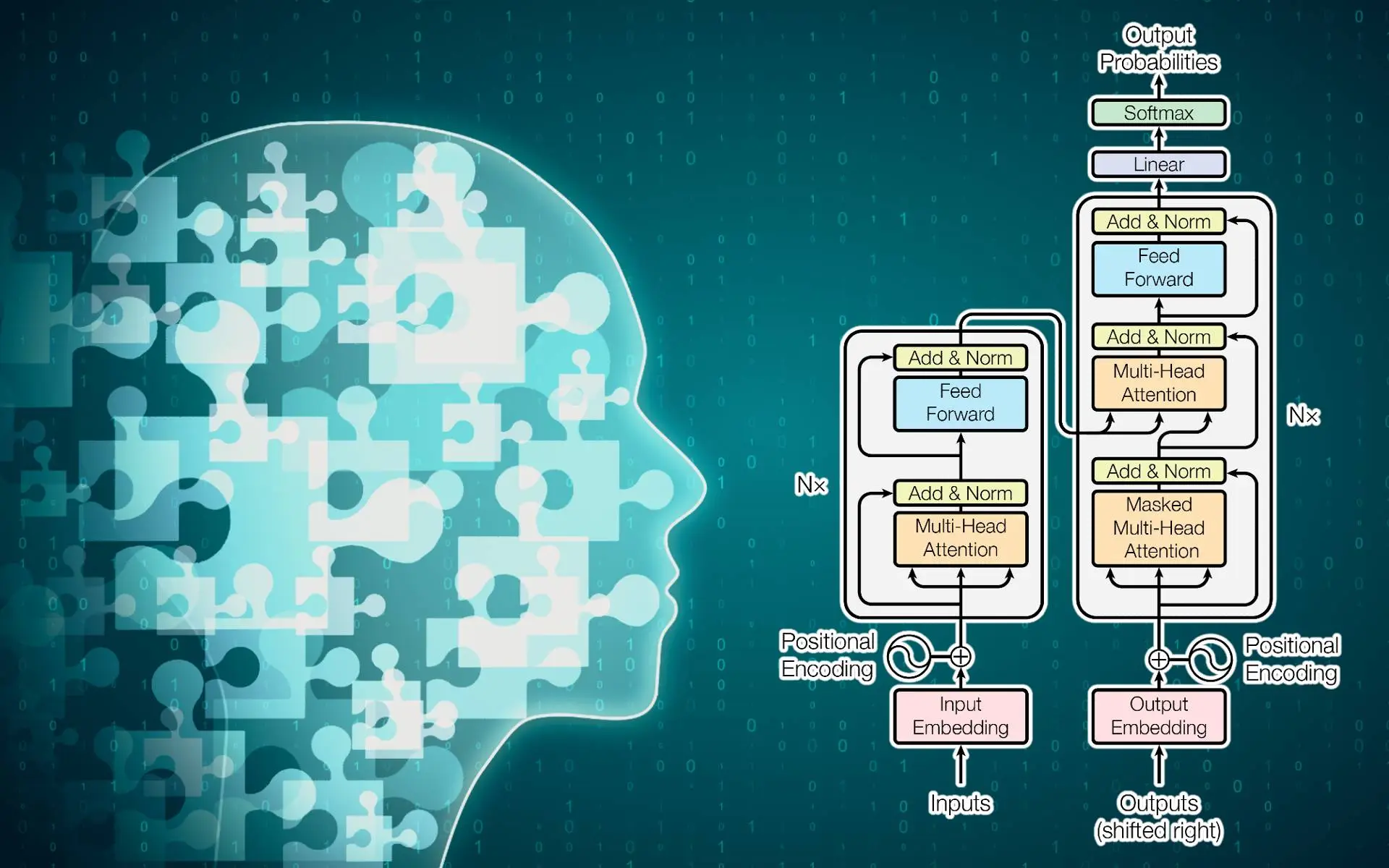

Berker Demirel pdf / code I re-implemented Andrej Karpathy's nanoGPT with the aim of making it simpler and easier to update. The model is evaluated on the Tiny Shakespeare dataset, and the results can be found in the repository. |

|

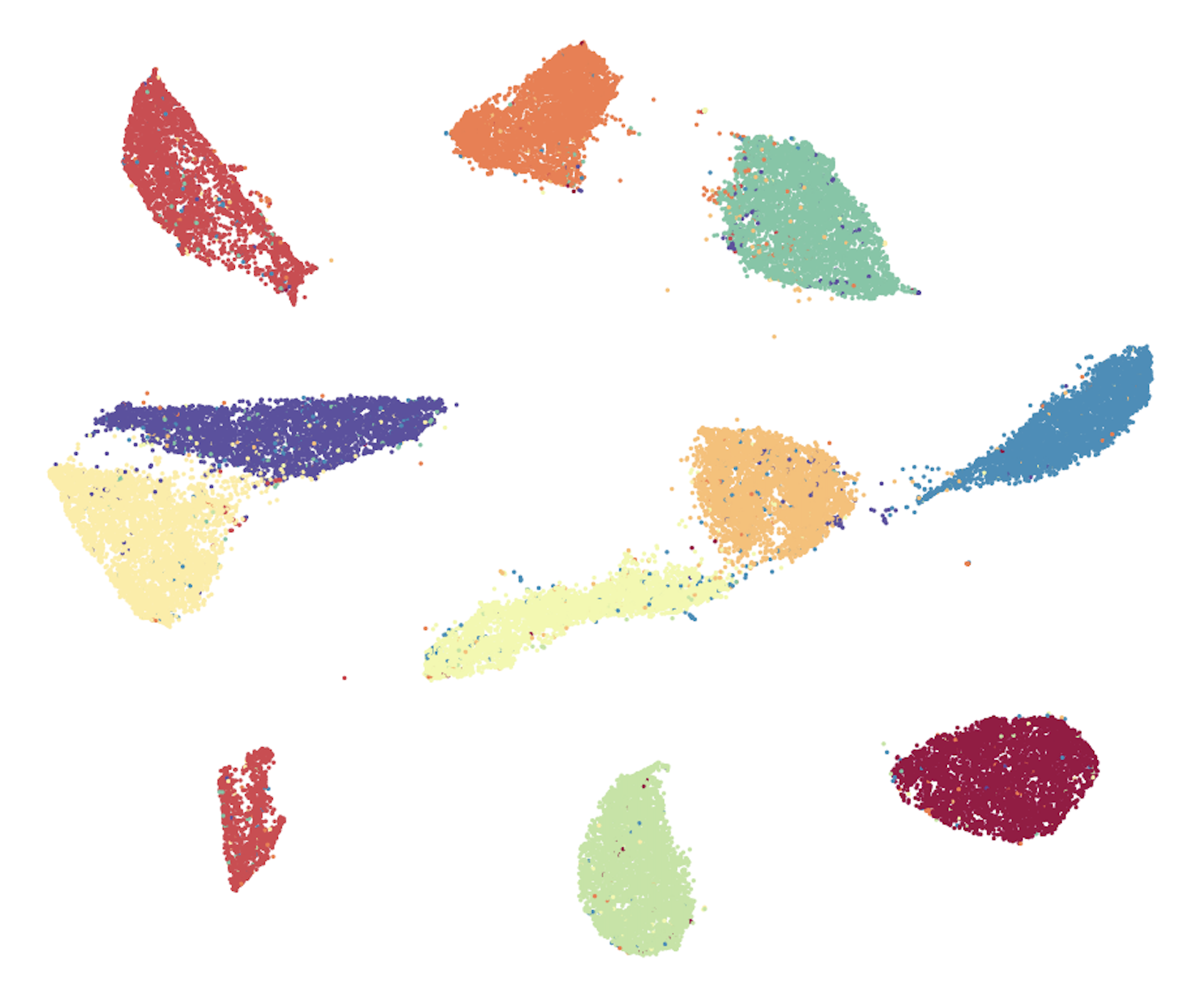

Berker Demirel pdf / code I implemented the Uniform Manifold Approximation and Projection (UMAP) algorithm in Python from scratch and ran experiments on the MNIST and Load Digits datasets. |

|

Berker Demirel, Naci Ege Sarac pdf / code We implemented Direction-Optimized BFS using OpenMP and CUDA. |
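
For readers unfamiliar with the technique, here is a minimal sequential sketch of direction-optimized BFS on an undirected graph. It alternates between top-down steps (expand the frontier) and bottom-up steps (each unvisited vertex looks for a parent in the frontier). The switching rule based on `switch_fraction` is a simplified assumption for illustration, and the sketch does not reflect the OpenMP or CUDA parallelization of the project.

```python
def direction_optimized_bfs(adj, source, switch_fraction=0.25):
    """Return BFS distances from source (-1 for unreachable vertices).
    adj is an adjacency list of an undirected graph with vertices 0..n-1.
    A level runs bottom-up when the frontier holds more than
    switch_fraction * n vertices (simplified heuristic), else top-down."""
    n = len(adj)
    dist = [-1] * n
    dist[source] = 0
    frontier = {source}
    level = 0
    while frontier:
        level += 1
        next_frontier = set()
        if len(frontier) > switch_fraction * n:
            # Bottom-up: every unvisited vertex searches for a frontier neighbor.
            for v in range(n):
                if dist[v] == -1:
                    for u in adj[v]:
                        if u in frontier:
                            dist[v] = level
                            next_frontier.add(v)
                            break
        else:
            # Top-down: every frontier vertex claims its unvisited neighbors.
            for u in frontier:
                for v in adj[u]:
                    if dist[v] == -1:
                        dist[v] = level
                        next_frontier.add(v)
        frontier = next_frontier
    return dist

# Toy graph: a 4-cycle 0-1-3-2-0, a tail 3-4, and an isolated vertex 5.
graph = [[1, 2], [0, 3], [0, 3], [1, 2, 4], [3], []]
distances = direction_optimized_bfs(graph, source=0)
```

Both step types produce the same distances; the bottom-up step pays off when the frontier is large, because most unvisited vertices find a parent after checking only a few neighbors.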

|

Website template credits to Jon Barron. |